Some thoughts on Nvidia regarding AI

Nvidia c(S)hips toward AI

Introduction:

Announced in August 1999 and released in October of the same year, the GeForce 256 was marketed by Nvidia as the world's first GPU and helped shape PC gaming as we know it today. Fast-forward some 20 years: in his unusual 2020 GTC "Kitchen Keynote", Jensen Huang, the CEO of Nvidia, hailed the 'Age of AI'.

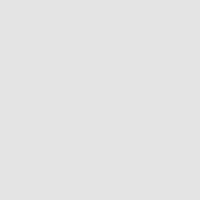

Earlier this year, Nvidia's market capitalisation reached a trillion dollars, placing it just behind Amazon. Its stock price has quadrupled in less than three years, driven partly by growing demand for chips in artificial intelligence (AI) systems.

What Nvidia is about:

Before diving into AI buzzwords such as ChatGPT, the well-known AI language model leading the current AI wave, a short description of Nvidia's business will help us make sense of the market.

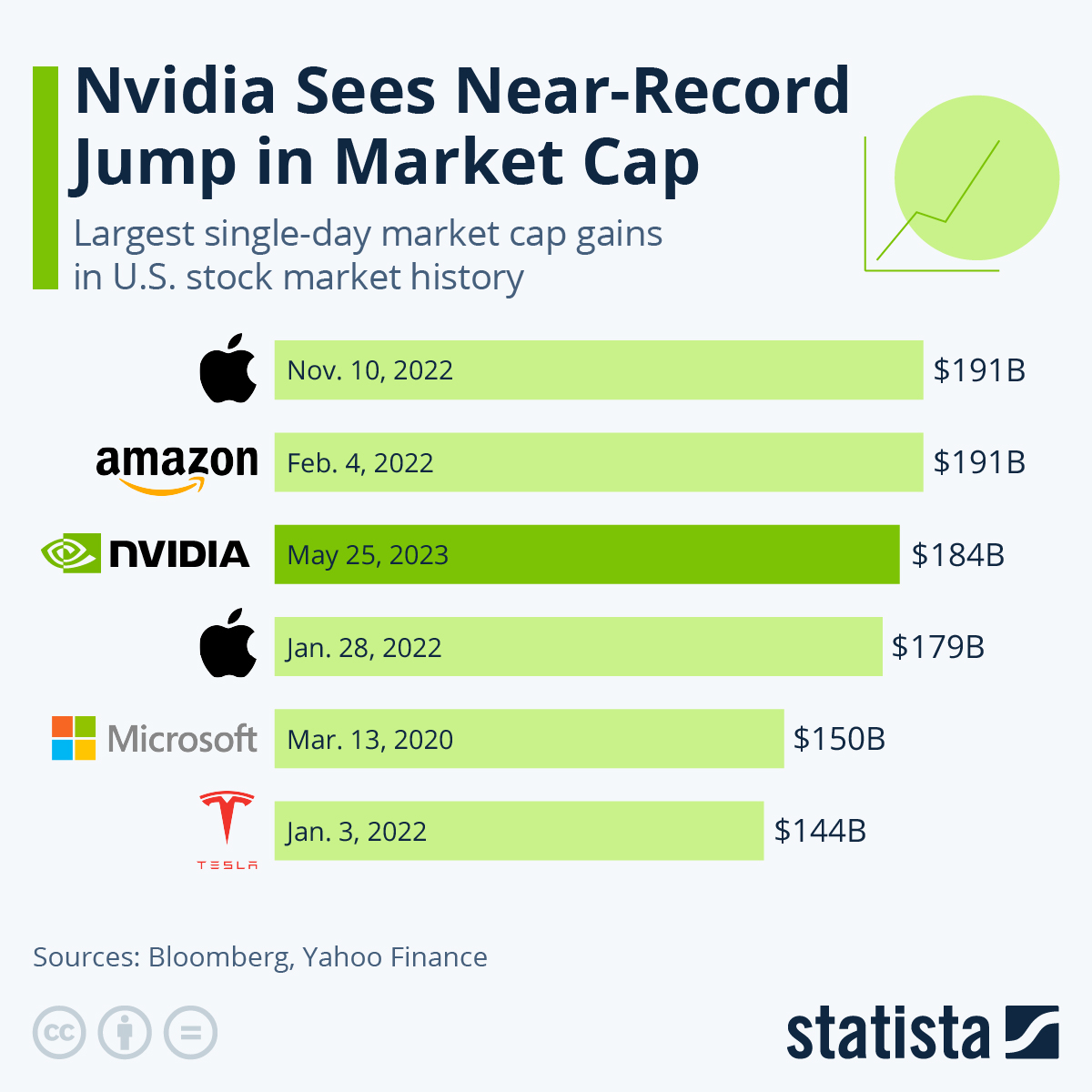

As briefly hinted above, Nvidia's core business is the graphics processing unit (GPU).

Those GPUs were mainly used to enhance graphics (images, video, gaming, displays...) until 2006, when researchers at Stanford University found that GPUs could run certain mathematical computations much faster than CPUs (central processing units). That ability later made GPUs central to mining cryptocurrencies and running AI models.

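The main difference between a CPU and a GPU is that the former handles tasks largely one after another (sequentially), with a few powerful cores suited to large, complex tasks, while the latter runs many smaller operations at the same time (in parallel). Being able to split a large task into many small ones and run them in parallel is exactly what modern workloads need, which explains the ongoing GPU race.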
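To make the sequential-versus-parallel distinction concrete, here is a minimal Python sketch. It is only an analogy under stated assumptions: threads stand in for GPU cores, the hypothetical `brighten` function stands in for a small per-pixel operation, and no real GPU is involved.

```python
"""Toy illustration of sequential vs data-parallel execution.

Assumption: threads stand in for GPU cores purely to show how one large
task is split into many independent small ones. CPython threads do not
give real speed-ups for CPU-bound work, so no timing is measured here.
"""
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int) -> int:
    """Hypothetical small operation applied to one pixel value (0-255)."""
    return min(pixel + 40, 255)


def process_sequential(pixels: list[int]) -> list[int]:
    # CPU-style: a single worker walks through every element in order.
    return [brighten(p) for p in pixels]


def process_parallel(pixels: list[int], workers: int = 8) -> list[int]:
    # GPU-style (simulated): each element is independent, so the work can
    # be handed out to many workers at once. map() preserves input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(brighten, pixels))


if __name__ == "__main__":
    image = [0, 100, 200, 250]
    print(process_sequential(image))  # [40, 140, 240, 255]
    print(process_parallel(image))    # [40, 140, 240, 255]
```

Because each pixel is processed independently of the others, the same work can be spread across as many workers as are available; a GPU applies this idea with thousands of cores.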

The three leaders of the GPU market are, in alphabetical order, AMD, Intel and Nvidia, within a wider array of GPU vendors:

Advanced Micro Devices Inc. (AMD)

Apple Inc.

Arm Ltd.

ASUSTeK Computer Inc.

Broadcom Inc.

EVGA Corp.

Fujitsu Ltd.

Galaxy Microsystems Ltd.

Gigabyte Technology Co. Ltd.

Imagination Technologies Ltd.

Intel Corp.

International Business Machines Corp.

NVIDIA Corp.

Qualcomm Inc.

Samsung Electronics Co. Ltd.

SAPPHIRE Technology Ltd.

Taiwan Semiconductor Manufacturing Co. Ltd.

Zotac Technology Ltd.

One of the first large-scale uses of GPUs outside graphics came with bitcoin, a blockchain-based cryptocurrency launched in January 2009.

A blockchain is a distributed ledger: a shared record of transactions that, once written, are practically unchangeable.
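The "unchangeable" property can be illustrated with a toy hash chain. This is a simplified Python sketch, not bitcoin's real data format: the block layout and the helper names `build_chain` and `is_valid` are hypothetical, and only the standard `hashlib` and `json` modules are used.

```python
"""Minimal hash-chained ledger, for illustration only.

Assumption: a toy model, not bitcoin's actual block format. Each block
stores the hash of the previous block, so editing an old block breaks
the link to the next one, and hiding the edit would mean rewriting
every later block.
"""
import hashlib
import json


def block_hash(block: dict) -> str:
    # Hash a canonical JSON encoding of the block's contents.
    encoded = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def build_chain(transactions: list[str]) -> list[dict]:
    chain = []
    prev = "0" * 64  # placeholder hash for the first ("genesis") block
    for tx in transactions:
        block = {"tx": tx, "prev_hash": prev}
        chain.append(block)
        prev = block_hash(block)
    return chain


def is_valid(chain: list[dict]) -> bool:
    # Every block must point at the hash of the block before it.
    for earlier, later in zip(chain, chain[1:]):
        if later["prev_hash"] != block_hash(earlier):
            return False
    return True


if __name__ == "__main__":
    ledger = build_chain(["Alice pays Bob 5", "Bob pays Carol 2", "Carol pays Dan 1"])
    print(is_valid(ledger))  # True
    ledger[0]["tx"] = "Alice pays Bob 500"  # tamper with history
    print(is_valid(ledger))  # False
```

In a real blockchain, many independent parties hold copies of the chain, so a tampered copy is also rejected by everyone else.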

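Put simply, a blockchain relies on many independent parties to verify and record transactions, and for proof-of-work cryptocurrencies such as bitcoin, that verification demands a great deal of computation. This is why GPUs, built for parallel work, became essential to miners.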

Mining cryptocurrency (creating new blocks through repeated computer calculations) is a computationally intensive process, widely criticised for its heavy energy use and impact on the climate.
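Why mining consumes so much computing power can be seen in a toy proof-of-work loop. This Python sketch is a simplification under stated assumptions: the `mine` function and its "leading zero hex digits" rule are hypothetical stand-ins, whereas bitcoin actually hashes a binary block header twice with SHA-256 and compares the result against a numeric target.

```python
"""Toy proof-of-work "mining" loop.

Assumption: a simplified model inspired by bitcoin, not its real
algorithm. A miner must find a nonce whose SHA-256 hash (as hex)
starts with a given number of zero digits.
"""
import hashlib


def mine(block_data: str, difficulty: int) -> tuple[int, str]:
    target = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode("utf-8")).hexdigest()
        if digest.startswith(target):
            return nonce, digest
        nonce += 1  # no shortcut exists: just try the next candidate


if __name__ == "__main__":
    nonce, digest = mine("Alice pays Bob 5", difficulty=4)
    print(nonce, digest)
```

Each additional zero digit multiplies the expected number of attempts by 16. Since every candidate nonce can be checked independently, the search is easy to spread across many parallel cores, which is where GPUs came in, along with their electricity bill.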

Bitcoin reached its peak value in late 2021, by which time blockchain technologies were also being applied beyond the financial industry, for example to track intangible assets.

The cryptocurrency hype peaked during the COVID-19 lockdowns and faded as life returned to normal in 2022.

GPU prices bore witness to that period, rising sharply and then falling back. Some may argue that other external factors, such as reduced GPU production during the same period, also affected GPU prices.

AI: How long will this new trend last?

In November 2022, OpenAI, a research laboratory working on AI, launched ChatGPT, a chatbot built on its AI language models. Microsoft is OpenAI's leading investor and reportedly plans to invest $10 billion in it over the coming years.

Microsoft started investing in OpenAI as early as 2019. This year, Microsoft introduced Microsoft 365 Copilot, an AI assistant that works inside its cash cow Office 365 (now Microsoft 365).

As expected, Nvidia's role in the AI surge of late 2022 and early 2023 is hardware-based. Its flagship products are the A100 data-centre GPU and the A800, a scaled-down version for the Chinese market that lets Nvidia keep selling there despite US export controls. These chips power the servers that run AI language models.

Lastly, Nvidia unveiled Grace, a data-centre CPU based on Arm technology. Paired with an Nvidia GPU in the Grace Hopper Superchip, it combines CPU and GPU in a single package designed for high computing performance and energy efficiency, and is set to appear in the next generation of data centres.

We are only at the start of the AI cycle. It has just begun...

Sources:

https://en.wikipedia.org/wiki/GeForce_256 (GeForce 256)

https://blogs.nvidia.com/blog/2020/10/05/age-ai-jensen-huang-cambridge-doca-bluefield-dpu-superpod-jetson-omniverse-videoconferencing/ (NVIDIA CEO Outlines Vision for ‘Age of AI’ in News-Packed GTC Kitchen Keynote)

https://www.bbc.com/news/business-65675027 (Nvidia: The chip maker that became an AI superpower)

https://www.ft.com/content/9dfee156-4870-4ca4-b67d-bb5a285d855c (China’s internet giants order $5bn of Nvidia chips to power AI ambitions)

https://www.cdw.com/content/cdw/en/articles/hardware/cpu-vs-gpu.html (CPU vs. GPU: What's the Difference?)

https://money.usnews.com/investing/articles/the-history-of-bitcoin (The History of Bitcoin, the First Cryptocurrency)

https://www.ibm.com/topics/blockchain (What is blockchain technology?)

https://www.searchenginejournal.com/history-of-chatgpt-timeline (History Of ChatGPT: A Timeline Of The Meteoric Rise Of Generative AI Chatbots)

https://www.cnbc.com/2023/01/10/microsoft-to-invest-10-billion-in-chatgpt-creator-openai-report-says.html (Microsoft reportedly plans to invest $10 billion in creator of buzzy A.I. tool ChatGPT)

https://blogs.microsoft.com/blog/2023/03/16/introducing-microsoft-365-copilot-your-copilot-for-work (Introducing Microsoft 365 Copilot – your copilot for work)

https://www.nvidia.com/en-us/data-center/grace-cpu/ (Grace CPU)